At a basic level, nuclear power is the practice of splitting atoms to boil water, turn turbines, and generate electricity.

Principles of nuclear power

Atoms are constructed like miniature solar systems. At the center of the atom is the nucleus; orbiting around it are electrons.

The nucleus is composed of protons and neutrons, very densely packed together. Hydrogen, the lightest element, has one proton; the heaviest natural element, uranium, has 92 protons.

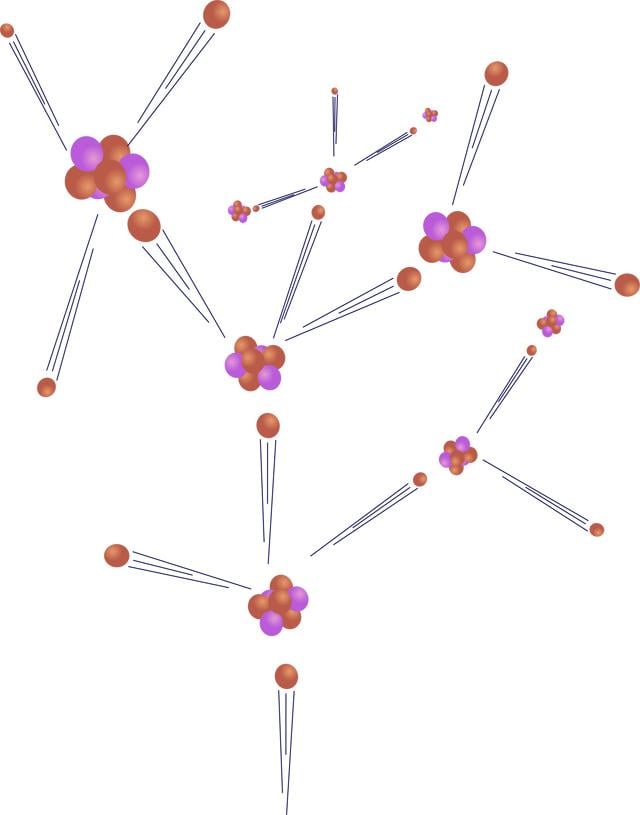

The nucleus of an atom is held together with great force, the "strongest force in nature." When bombarded with a neutron, it can be split apart, a process called fission (pictured above). Because uranium atoms are so large, the atomic force that binds them together is relatively weak, making uranium good for fission.

In nuclear power plants, neutrons collide with uranium atoms, splitting them. This split releases neutrons from the uranium that in turn collide with other atoms, causing a chain reaction. This chain reaction is controlled with "control rods" that absorb neutrons.
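The chain reaction described above can be sketched as a simple generation-by-generation count. This is only a toy model under stated assumptions: the multiplication factor `k` (the average number of neutrons from one fission that go on to cause another) and the function name are illustrative and do not come from the text. In this picture, control rods absorb neutrons, which corresponds to lowering `k`.

```python
def neutron_population(k, generations, start=1.0):
    """Neutron count in each generation, given multiplication factor k.

    k is a hypothetical modeling parameter (not from the text): the average
    number of neutrons from one fission that go on to cause another fission.
    """
    counts = [start]
    for _ in range(generations):
        counts.append(counts[-1] * k)
    return counts


# k > 1: each generation is larger than the last (runaway growth).
growing = neutron_population(k=2.0, generations=10)

# k = 1: control rods absorb just enough neutrons to hold the reaction steady.
steady = neutron_population(k=1.0, generations=10)

# k < 1: more neutrons are absorbed than replaced, so the reaction dies out.
fading = neutron_population(k=0.5, generations=10)

print(growing[-1], steady[-1], fading[-1])
```

A real reactor is far more complicated, but the sketch shows why absorbing even a modest share of neutrons is enough to keep the reaction from growing.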

In the core of nuclear reactors, the fission of uranium atoms releases energy that heats water to about 520 degrees Fahrenheit. This hot water is then used to spin turbines that are connected to generators, producing electricity.

Mining and processing nuclear fuels

Uranium is one of the least plentiful minerals, making up only two parts per million in the earth's crust, but because of its radioactivity it is a concentrated source of energy. One pound of uranium has as much energy as three million pounds of coal.

Radioactive elements gradually decay, losing their radioactivity. The time it takes an element to lose half of its radioactivity is called its "half-life." U-238, the most common form of uranium, has a half-life of 4.5 billion years.
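The half-life idea can be written as a short calculation. The sketch below assumes the standard exponential decay rule, in which the remaining fraction halves with every half-life that passes. The function name is illustrative, and the only figure taken from the text is the 4.5 billion year half-life of U-238.

```python
U238_HALF_LIFE_YEARS = 4.5e9  # half-life of U-238, as given in the text


def fraction_remaining(years, half_life_years):
    """Fraction of the original radioactive material left after `years`."""
    return 0.5 ** (years / half_life_years)


# After one half-life, half of the material remains; after two, a quarter.
after_one = fraction_remaining(4.5e9, U238_HALF_LIFE_YEARS)
after_two = fraction_remaining(9.0e9, U238_HALF_LIFE_YEARS)

# Over a human timescale (here 1,000 years), almost nothing has decayed.
after_millennium = fraction_remaining(1_000, U238_HALF_LIFE_YEARS)

print(after_one, after_two, after_millennium)
```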

Uranium is found in a number of geological formations, as well as sea water. To be mined as a fuel, however, it must be sufficiently concentrated, making up at least one hundred parts per million (0.01 percent) of the rock it is in.

In the U.S., uranium is mined from sandstone deposits in the same regions as coal. Wyoming and the Four Corners region produce most U.S. uranium.

The mining process is similar to coal mining, with both open pit and underground mines. It produces similar environmental impacts, with the added hazard that uranium mine tailings are radioactive. Groundwater can be polluted not only from the heavy metals present in mine waste, but also from the traces of radioactive uranium still left in the waste. Half of the people employed by the uranium mining industry work on cleaning up the mines after use.

The Department of Energy estimates that the U.S. has proven uranium reserves of at least 300 million pounds, primarily in New Mexico, Texas, and Wyoming. U.S. power plants use over 40 million pounds of uranium fuel each year. Much more uranium is likely to be available beyond our proven reserves.
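To put the two figures above side by side, a rough calculation shows how long proven reserves would last at the current rate of use. This is a back-of-the-envelope sketch, assuming constant consumption and no imports or new discoveries. The function name is illustrative, and the text gives both numbers only as bounds ("at least" and "over").

```python
PROVEN_RESERVES_LB = 300e6  # "at least 300 million pounds" (from the text)
ANNUAL_USE_LB = 40e6        # "over 40 million pounds" per year (from the text)


def years_of_supply(reserves_lb, annual_use_lb):
    """Years a reserve would last at a constant rate of use."""
    return reserves_lb / annual_use_lb


supply_years = years_of_supply(PROVEN_RESERVES_LB, ANNUAL_USE_LB)
print(f"Proven reserves alone would last roughly {supply_years:.1f} years")
```

On these assumptions, proven domestic reserves cover less than a decade of use, which is why imports and reserves beyond the proven category matter.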

Uranium comes in two main forms, U-235 and U-238. As found in nature, uranium is more than 99 percent U-238; unfortunately, the much scarcer U-235 is what is used in power plants. U-238 can also be processed into plutonium, which is also fissionable.

Once mined, the uranium ore is sent to a processing plant to be concentrated into a useful fuel. There are 16 processing plants in the US, although eight are inactive. Most uranium concentrate is made by leaching the uranium from the ore with acids. (Sometimes the concentrate is made underground, without removing the uranium ore.) When finished, the uranium ore has been turned into U3O8, a uranium oxide concentrate commonly called "yellowcake." After further processing, including enrichment to raise the share of U-235, the uranium is shaped into small fuel pellets.

The pellets are then packed into 12-foot long rods, called fuel rods. The rods are bundled together into fuel assemblies, ready to be used in the core of a reactor.

As of 2012, over 80% of uranium purchased by civilian nuclear reactors was imported to the U.S., not mined domestically, creating a trade deficit. Main suppliers include Russia, Canada, Australia, Kazakhstan, and Namibia.

Nuclear reactors

There are currently 99 commercial nuclear reactors in operation in the United States. Over a dozen commercial reactors have been shut down permanently, with more retirements likely to be announced in coming years.

Most of the plants in operation are "light water" reactors, meaning they use normal water in the core of the reactor. Different reactor technologies are in use abroad, such as the "heavy water" reactors in Canada. U.S. reactors account for more than one-fourth of nuclear power capacity in the world.

In the United States, two-thirds of the reactors are pressurized water reactors (PWR) and the rest are boiling water reactors (BWR). In a boiling water reactor, shown above, the water is allowed to boil into steam, and is then sent through a turbine to produce electricity.

In pressurized water reactors, the core water is held under pressure and not allowed to boil. The heat is transferred to water outside the core with a heat exchanger (also called a steam generator), boiling the outside water, generating steam, and powering a turbine. In pressurized water reactors, the water that is boiled is separate from the fission process, and so does not become radioactive.

After the steam is used to power the turbine, it is cooled off to make it condense back into water. Some plants use water from rivers, lakes or the ocean to cool the steam, while others use tall cooling towers. The hourglass-shaped cooling towers are the familiar landmark of many nuclear plants. For every unit of electricity produced by a nuclear power plant, about two units of waste heat are rejected to the environment.
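The ratio of electricity to waste heat given above implies a thermal efficiency of about one-third. The sketch below works through that arithmetic. The function names and the 1,000 megawatt example plant are illustrative assumptions, and the calculation ignores smaller losses elsewhere in the plant.

```python
def thermal_efficiency(electric_units, waste_heat_units):
    """Share of the reactor's heat that ends up as electricity."""
    total_heat = electric_units + waste_heat_units
    return electric_units / total_heat


def waste_heat_mw(electric_mw, efficiency):
    """Waste heat rejected (in MW) for a given electrical output."""
    return electric_mw * (1 - efficiency) / efficiency


# One unit of electricity for every two units of waste heat (from the text).
efficiency = thermal_efficiency(electric_units=1, waste_heat_units=2)

# Hypothetical 1,000 MW plant, used only to illustrate the scale.
rejected = waste_heat_mw(electric_mw=1000, efficiency=efficiency)

print(f"Efficiency: {efficiency:.0%}, waste heat: {rejected:.0f} MW")
```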

Commercial nuclear power plants range in size from about 60 megawatts for the first generation of plants in the early 1960s, to over 1000 megawatts. Many plants contain more than one reactor. The Palo Verde plant in Arizona, for example, is made up of three separate reactors, each with a capacity of 1,334 megawatts.

Some foreign reactor designs use coolants other than water to carry the heat of fission away from the core. Canadian reactors use water loaded with deuterium (called "heavy water"), while others are gas cooled. One plant in Colorado, now permanently shut down, used helium gas as a coolant (called a High Temperature Gas Cooled Reactor). A few plants use liquid metal, such as sodium.

Nuclear waste

By the end of 2011, over 67,000 metric tons of highly radioactive waste had been produced by US-based nuclear reactors. That increases by about 2,000 metric tons every year.
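The growth of the waste stockpile can be projected with simple arithmetic. The sketch below assumes the yearly increase stays constant at the figure given above, which is a simplification because the rate changes as reactors open and close. The function name is illustrative.

```python
WASTE_2011_TONS = 67_000       # metric tons by the end of 2011 (from the text)
ANNUAL_INCREASE_TONS = 2_000   # metric tons added per year (from the text)


def projected_waste_tons(year):
    """Linear projection of accumulated waste at the end of `year`."""
    if year < 2011:
        raise ValueError("projection starts from the 2011 figure")
    return WASTE_2011_TONS + ANNUAL_INCREASE_TONS * (year - 2011)


# Projected totals at the end of 2011 and after each of the next two decades.
for year in (2011, 2021, 2031):
    print(year, projected_waste_tons(year))
```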

Before the mid-1970s, the plan for spent uranium was to reprocess it into new fuel.

Since a by-product of reprocessing is plutonium, which can be used to make nuclear weapons, President Carter ordered the end of reprocessing, citing security risks. Reprocessing also had a difficult time competing economically with new uranium fuel.

Since then, the Department of Energy has been studying storage sites for long-term burial of the waste, especially at Yucca Mountain in Nevada. Under the guise of research, DOE has built a full-scale system of tunnels into the mountain at a cost of over $5 billion.

Although Yucca Mountain has yet to be officially chosen, there are no other sites being considered.

Meanwhile, radioactive waste is being stored at the nuclear plants where it is produced. The most common option is to store it in spent fuel cooling pools, large steel-lined tanks that use electricity to circulate water. As these pools fill up, some fuel rods are being transferred to large steel and concrete casks, which are considered safer.

In addition to the spent fuel, the plants themselves contain radioactive waste that must be disposed of after they are shut down. Plants can either be disassembled immediately or can be kept in storage for a number of years to give the radiation some time to diminish. Most of the plant is considered "low level waste" and can be stored in less secure locations.

Currently, only two sites accept low level waste: Barnwell in South Carolina and Hanford in Washington. NRC estimates decommissioning costs to range from $133 million to $303 million per plant, but so far no large reactors have been decommissioned. A number are in storage awaiting decommissioning at a future time.

The rise of nuclear power

The principles of nuclear power were formulated by physicists in the early 20th century. In 1939, German scientists discovered the process of fission, triggering a race with US scientists to use the incredible power of fission to create a bomb.

Through the intense effort of the Manhattan Project, the atomic bomb was created by 1945, and used to destroy Hiroshima and Nagasaki at the end of World War II.

After the war, "great atomic power" was seen as a potential new energy source. The government's Plowshare Program thought atomic explosions would be a labor-saving way to dig canals and drinking water reservoirs and to mine for gas and oil. As late as the 1960s, bombs were being set off above and below ground to test different ideas.

"By 1980, cratering explosions will probably be safe enough to take on mammoth excavation projects, such as the construction of a new shipping canal through the Isthmus of Panama. That job would be done with 651 H-bombs having a total power of 42 megatons. To build it with conventional explosives would cost nearly six billion dollars -- using nuclear blasts just a little over two billion. Excavating, which took almost 20 years for the old Canal, might take only five for the new one."

A more successful use of atomic power was in nuclear reactors. Admiral Hyman Rickover guided the development of small reactors to power submarines, greatly extending their range and power. The USS Nautilus was launched in 1954.

By the late 1950s, nuclear power was being developed for commercial electric power, first in England. Morris, Illinois, was the site of the first U.S. commercial reactor, the Dresden plant, starting in 1960. A plant at Shippingport, Pennsylvania, went on line in 1957, but was not commercially owned.

The head of the Atomic Energy Commission, Lewis Strauss, said in 1954 that "it is not too much to expect that our children will enjoy in their home electrical energy too cheap to meter." The AEC was an active promoter of nuclear power throughout the 1950s and '60s. At the same time, it was supposed to be the industry's regulator. As a result, many of the early safety concerns about nuclear power were suppressed. Any consideration of the long-term effects and hazards was downplayed.

In 1963, General Electric sold the Oyster Creek plant in New Jersey to General Public Utilities (later to own Three Mile Island) for $60 million, far less than the actual construction cost. Business Week described the plant as "the greatest loss leader in American industry," estimating that GE lost $30 million on the deal in order to win the bid. This started a trend of "turnkey" nuclear plants—plants that were sold to utilities only when fully completed. Such turnkey plants enabled the nuclear industry to get off the ground, with plant orders booming in the late 1960s.

The fall of nuclear power

After absorbing as many losses as they could, manufacturers ended turnkey offers. By the 1970s, about 200 plants were built, under construction or planned. But a number of factors conspired to end the nuclear boom.

First, cost overruns revealed the true cost of nuclear plants. Once utilities began building the plants as their own projects, their lack of experience with the technology, the use of unique designs for every plant, and a "build in anticipation of design" approach led to enormous cost overruns.

Because construction took years to complete, utilities found themselves with huge amounts of money invested in a plant before any problems developed. Cincinnati Gas and Electric, for example, went into debt by $716 million to build its Zimmer nuclear power plant, some 90 percent of the utility's net worth. Yet the utility canceled construction of the plant in 1983.

Second, energy prices rose quickly in the 1970s due to the OPEC oil embargo, coal industry labor problems, and natural gas supply shortages. These high prices led to improvements in energy efficiency and a declining demand for energy. After many years of 7% annual increases in electricity demand, annual growth fell to only 2% by the late 1970s. Since nuclear plants were large, often more than 1000 MW each, a slowdown in demand growth meant they were underutilized, further aggravating the debt burden on utilities.
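The difference between 7% and 2% annual growth is easier to see as a doubling time. The sketch below assumes steady compound growth at each rate, and the function name is illustrative. It shows why plants planned for a world of 7% growth were oversized for one of 2% growth.

```python
import math


def doubling_time_years(annual_growth_rate):
    """Years for demand to double under steady compound growth."""
    return math.log(2) / math.log(1 + annual_growth_rate)


def demand_multiple(annual_growth_rate, years):
    """Demand after `years`, as a multiple of today's demand."""
    return (1 + annual_growth_rate) ** years


fast = doubling_time_years(0.07)  # growth rate before the slowdown (from the text)
slow = doubling_time_years(0.02)  # growth rate by the late 1970s (from the text)

print(f"Doubling time at 7%: {fast:.1f} years; at 2%: {slow:.1f} years")
print(f"Demand after 10 years: {demand_multiple(0.07, 10):.2f}x "
      f"versus {demand_multiple(0.02, 10):.2f}x")
```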

Third, an increase in energy prices triggered an increase in inflation. High inflation meant high lending rates. Utilities deep in debt from nuclear plants saw interest rates rise, and were forced to raise electricity prices. State utility commissions, which had paid little attention to utility finances in an era of declining rates, were suddenly keenly interested in utility decisions about power plant investments.

Fourth, critical utility commissions were less likely to pass on all investment costs to utility ratepayers. In New York, the commission ruled that a quarter of the cost of the Shoreham nuclear plant was not "prudently incurred," and forced a loss of $1.35 billion on utility stockholders. Investors quickly became leery of risky and large investments in nuclear power.

Fifth, public opposition to nuclear plants gained steam in the 1970s. Plants at Seabrook, New Hampshire, and Shoreham, Long Island, were the focus of intense anti-nuclear protests. By intervening in siting and licensing decisions, anti-nuclear groups and state and local governments were able to block or delay construction of plants.

In 1979, a reactor core meltdown at the Three Mile Island nuclear plant was simply the last of a series of problems for the industry. Closer scrutiny by the Nuclear Regulatory Commission forced plant builders to change designs in mid-stream. Although government regulation is blamed by nuclear advocates for the woes of the industry, the federal government has been the industry's strongest ally. Only after Three Mile Island was the "watchdog" willing to do its duty.

By the 1980s, the nuclear industry was in serious trouble. No new plants were ordered after 1978, and all those ordered since 1973 were later canceled. Forbes magazine reported in 1985 that a sample of 35 plants then under construction were expected to cost six to eight times their original price estimate, and to take twice as long to build as planned—from six years to twelve.

The magazine called the nuclear power industry "the greatest managerial disaster in business history." In the end, 120 plants were canceled between 1972 and 1990, more than were built.

The future of nuclear power

US-based reactors were initially licensed to operate for up to 40 years. Beginning with the two reactors at the Calvert Cliffs nuclear plant in Maryland, many owners have applied to the NRC for permission to extend the reactor operation licenses for an additional 20 years.

Nearly three-quarters of the U.S. fleet have been re-licensed and the NRC is reviewing several other license renewal requests. A second round of 20-year license extensions is currently in discussion between the NRC, the Department of Energy, and the nuclear industry.

In 2012, the NRC issued licenses for two new reactors at the Vogtle nuclear plant in Georgia and two new reactors at the Virgil C. Summer nuclear plant in South Carolina, the first new reactor licenses to be issued in nearly two decades.

Authorization in 2012 to construct and operate four new reactors was offset by decisions in 2013 to permanently close four operating reactors: Crystal River Unit 3 in Florida, Kewaunee in Wisconsin, and San Onofre Units 2 and 3 in California. Stiff competition from natural gas factored into the decision to retire Kewaunee, while unexpected problems encountered during the replacement of aging components led to the other three reactor closures. During 2013, it was also announced that a fifth reactor, the Vermont Yankee nuclear plant, would permanently shut down in fall 2014. Other reactors across the country have followed suit.

The extent to which nuclear power remains a major U.S. energy source depends on many variables, including its role in fighting climate change, nuclear safety, cost, and the growth of other energy sources.